Jake Paul Faces a Wave of AI Deepfakes on TikTok and His Reaction Says It All

From makeup tutorials to 'coming out' clips, TikTok is awash with AI-generated videos of Jake Paul — and he’s finally speaking out about it. But what’s even stranger is how convincing they’ve become.

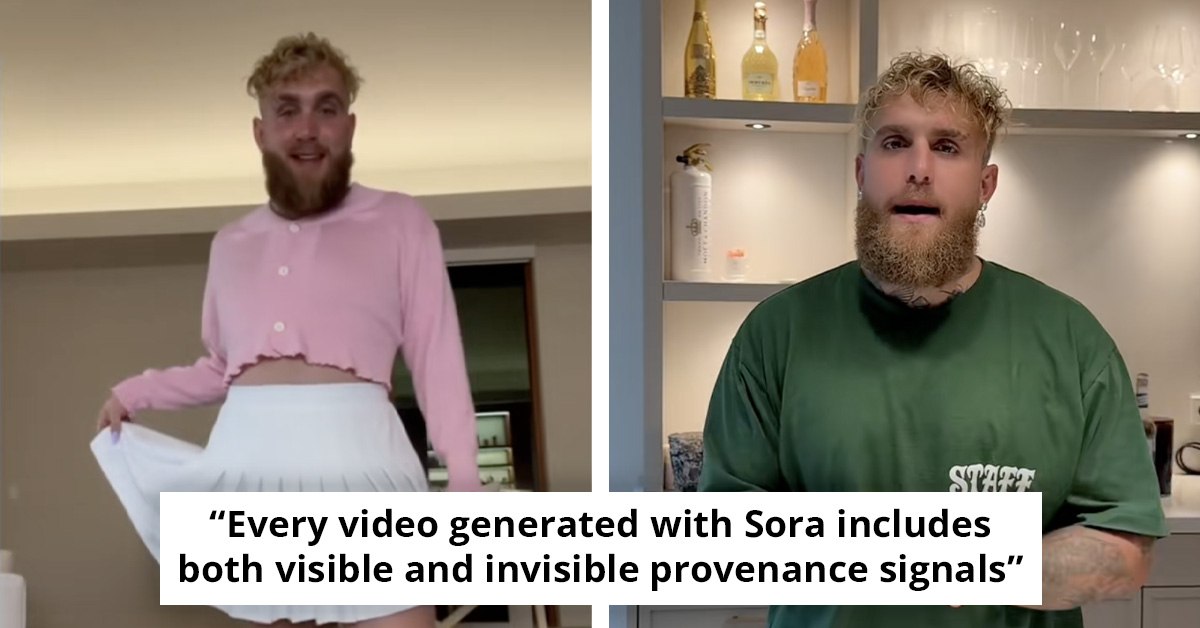

At first, it’s easy to laugh. A video of Jake Paul doing a makeup routine, another of him strutting in a skirt, and even one where he seems to share deeply personal news. It feels like just another day on TikTok — until you realize none of it is real.

The internet’s new obsession with AI deepfakes has crossed into unsettling territory. What started as a joke has turned into a digital mirror reflecting how blurred our sense of 'real' has become. Viewers scroll by, amused or confused, not realizing that a few pixels of distortion are the only clues separating reality from simulation.

Jake Paul, the YouTuber-turned-boxer known for controversy and control over his own narrative, now finds himself at the center of an AI circus he never agreed to join. And this time, the punchline isn’t his doing.

It’s a reminder of how fast technology can outpace our instincts — how a few clicks can build a fake version of someone that millions believe. As one clip glitches, revealing an extra finger, you can almost feel the eerie weight of the future pressing in: we’re watching entertainment evolve into impersonation.

Across TikTok, users are remixing AI models to generate uncanny versions of Jake Paul.

These clips show him in everyday scenarios — trying on clothes, joking with fans, or even delivering heartfelt confessions. The catch? None of it actually happened.

The videos are created with advanced text-to-video platforms, reportedly including OpenAI's Sora. The technology combines real likenesses, voice training, and motion synthesis to produce realistic human footage. And while these tools are meant to be used ethically, it's clear many creators are stretching the limits.

Paul has now spoken out, posting his reaction online. 'AI is getting out of hand,' he said, shaking his head as a friend offered him a Celsius.

Then, leaning into the absurdity, he mimicked the robotic voice from one of the viral clips.

In another post, he plays a snippet of the fake 'coming out' video for his fiancée, Jutta Leerdam. Her reaction reflects what many are thinking. 'I don’t like it; it’s not funny,' she tells him. 'People believe it.'

Experts have warned for years that once deepfake technology becomes widely accessible, no one is safe from digital impersonation. OpenAI, which makes Sora, says that 'every video generated with Sora includes both visible and invisible provenance signals,' along with watermarks meant to identify AI creations.

But watermarks only help if people notice them.

Most viewers don’t. Instead, the clips spread quickly, amplified by curiosity and confusion.

And while Sora says it blocks depictions of public figures unless they've given consent by uploading their likeness through its cameo feature, open-source models and cloning tools make it impossible to control every version floating around online.

For public figures like Paul, this raises deeper questions about consent, control, and reputation in the age of generative media. When anyone can recreate your face, how do you protect your voice?

What’s happening to Jake Paul might seem like internet chaos, but it’s also a glimpse into a future everyone will have to face. AI has turned creativity into something both thrilling and unnerving — a tool that can create art or erase authenticity.

The next time a familiar face pops up in your feed saying something unexpected, you might hesitate. You might look closer. And maybe that pause is the new digital literacy we all need.

Share this story with someone who still believes everything they see online — because in 2025, even disbelief needs an update!

The Reality of AI Deepfakes

Dr. Hany Farid, a professor at UC Berkeley and a leading expert on digital forensics, warns that AI deepfakes pose a growing challenge. He notes that the technology's ability to create hyper-realistic content can significantly erode public trust in media and in personal interactions.

As deepfakes become more prevalent, Farid recommends rigorous verification processes and stronger digital literacy. 'We need to educate users about the signs of manipulated content and encourage skepticism toward unverified media, especially on platforms like TikTok,' he says.

The rise of AI deepfakes also raises significant ethical concerns, particularly around misinformation. Dr. David Broniatowski of George Washington University, who has studied how deceptive media can manipulate public opinion, argues: 'When individuals encounter deepfakes, their perception of reality can be skewed, leading to a breakdown in trust among communities.'

To combat this, Broniatowski advises social media platforms to strengthen their content moderation systems. He also argues for transparency in algorithmic processes, so that users can understand how content is curated and presented, which would foster a more informed digital environment.

Psychological Insights & Implications

As AI deepfakes proliferate, both users and platforms need to adapt. Dr. Claire Wardle, a misinformation expert and co-founder of First Draft, stresses the importance of fostering a culture of verification. She recommends educational initiatives that teach users to assess content critically, including checking its source and context.

'By equipping individuals with the tools to discern fact from fiction, we can mitigate the negative impacts of deepfakes on society,' Wardle says. This proactive approach is vital for restoring public trust in digital content.